This project is currently a work in progress! More information (including source code) will be provided once we have published.

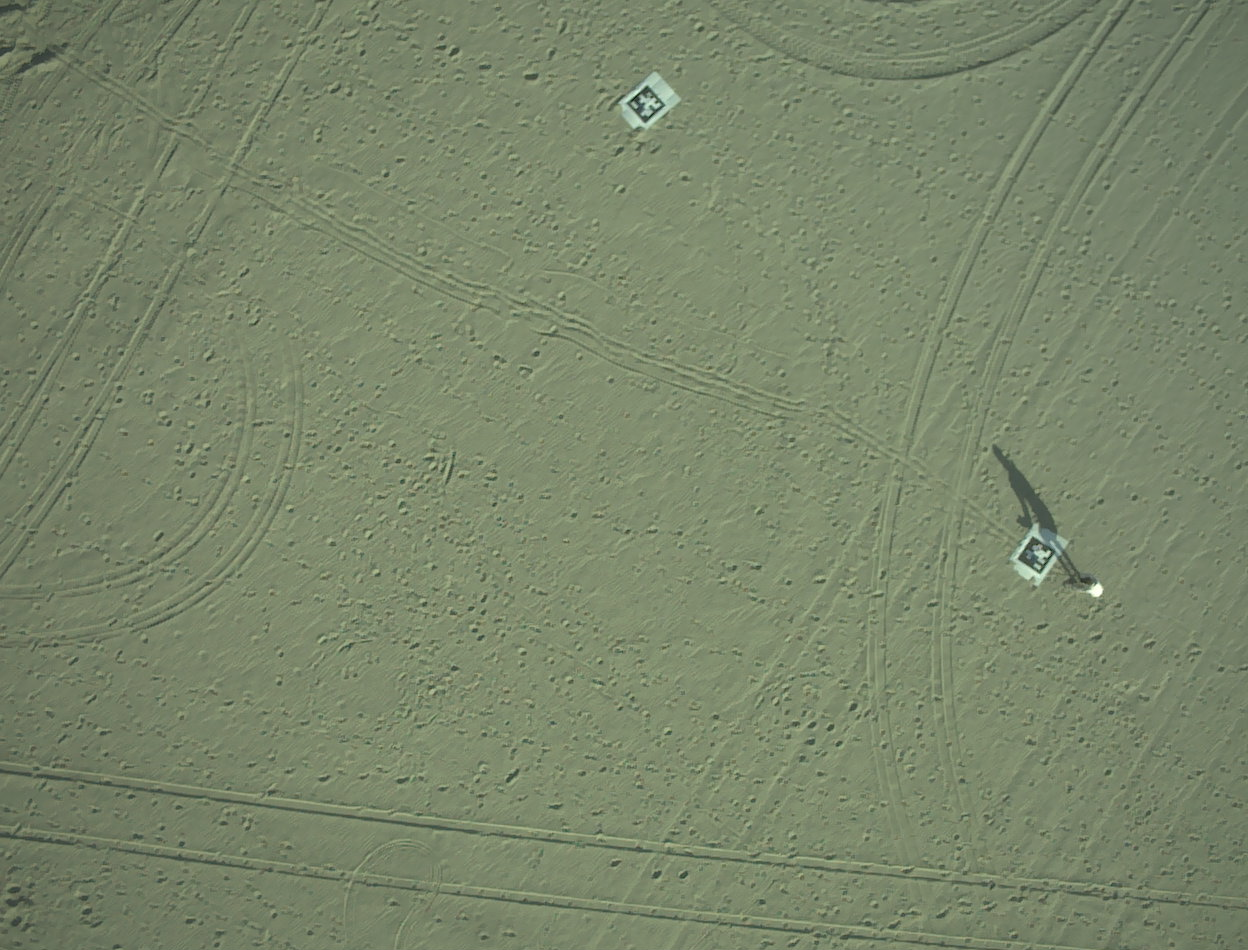

Collecting detailed imagery of a survey area often involves flying an aerial vehicle to take multiple pictures from a top-down perspective and then stitching them together in a process called orthomosaicing. This process has been well studied, but usually requires distinct, static features in the raw imagery to localize each image with respect to its neighbors. However, it is infeasible to use this approach when imaging bodies of water, which are oftentimes dynamic and feature-sparse. The RESL aquatics group is working on a new method for orthomosaicing over dynamic landscapes by dropping floating, instrumented ground control points (GCPs) across the target area before the aerial survey.

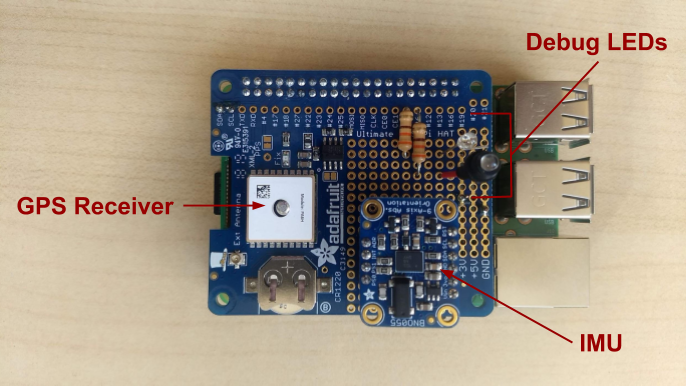

Each instrumented ground control point consists of a large AprilTag and a device that logs GPS and IMU data. In addition, the drone imagery will provide relative position estimates between the drone camera and each GCP.
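As a rough illustration of how a tag of known size can yield a relative position, here is a minimal sketch based on the pinhole camera model. It assumes the tag is viewed roughly head-on (a downward-facing camera over a flat, floating tag) and that lens distortion has already been corrected. The function names, parameters, and numbers are hypothetical and chosen for illustration only; this is not our actual detection pipeline.

```python
def estimate_range_m(focal_length_px, tag_side_m, tag_side_px):
    """Pinhole-model range to a fronto-parallel tag: Z = f * L / l.

    focal_length_px: camera focal length in pixels (assumed known from calibration)
    tag_side_m:      physical side length of the printed tag in metres
    tag_side_px:     apparent side length of the tag in the image, in pixels
    """
    if tag_side_px <= 0:
        raise ValueError("tag_side_px must be positive")
    return focal_length_px * tag_side_m / tag_side_px


def pixel_offset_to_metres(u_px, v_px, cx_px, cy_px, focal_length_px, range_m):
    """Back-project the tag centre (u, v) to a lateral offset in the camera frame.

    (cx_px, cy_px) is the principal point; the result is in metres at depth range_m.
    """
    x_m = (u_px - cx_px) * range_m / focal_length_px
    y_m = (v_px - cy_px) * range_m / focal_length_px
    return x_m, y_m


# Hypothetical numbers: a 0.5 m tag that spans 25 px with a 1000 px focal length.
example_range = estimate_range_m(1000.0, 0.5, 25.0)
example_offset = pixel_offset_to_metres(740.0, 360.0, 640.0, 360.0, 1000.0, example_range)
```

In practice a tag detector returns all four corners, so a full 6-DoF pose can be recovered rather than range alone; the sketch only conveys the geometric intuition.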

Surprisingly, we have not found a commercial off-the-shelf (COTS) solution for logging GPS and IMU data in our price range (<$100 per unit), so we built our own logger. Our emphasis is on using cheap, easily accessible sensors and improving the solution quality with advanced state estimation techniques. This allows us to use hobbyist-level electronics, specifically a Raspberry Pi with an Adafruit GPS HAT and a BNO055 9-DoF IMU.

Using a factor-graph solver from the GTSAM library, we construct a smoothing and mapping problem that combines the odometry from the drone and the GCPs with the visual distance estimates between the drone and the GCPs to jointly minimize the error in the pose of the drone and camera. The refined camera pose estimates allow us to create a much more accurate orthomosaic than if we relied only on the drone’s odometry data.
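To give a feel for why joint estimation helps, here is a toy sketch in plain Python. It does not use GTSAM and is not our solver: it replaces the full pose problem with a one-dimensional linear weighted least-squares problem, which is what a factor graph with Gaussian noise reduces to in the linear case. The variable layout, measurements, and noise values are all made up for illustration.

```python
def solve_weighted_least_squares(factors, n_unknowns):
    """Solve a small linear least-squares problem built from 'factors'.

    Each factor is (coeffs, measurement, sigma), where coeffs maps an unknown's
    index to its coefficient, so the factor's residual is
    sum(coeffs[i] * x[i]) - measurement, weighted by 1 / sigma**2.
    """
    n = n_unknowns
    # Accumulate the normal equations H x = g (H = A^T W A, g = A^T W b).
    H = [[0.0] * n for _ in range(n)]
    g = [0.0] * n
    for coeffs, z, sigma in factors:
        w = 1.0 / sigma ** 2
        for i, a_i in coeffs.items():
            g[i] += w * a_i * z
            for j, a_j in coeffs.items():
                H[i][j] += w * a_i * a_j

    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(H[r][col]))
        H[col], H[pivot] = H[pivot], H[col]
        g[col], g[pivot] = g[pivot], g[col]
        for r in range(col + 1, n):
            f = H[r][col] / H[col][col]
            for c in range(col, n):
                H[r][c] -= f * H[col][c]
            g[r] -= f * g[col]

    # Back substitution.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = g[r] - sum(H[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / H[r][r]
    return x


# Unknowns (east coordinate only, metres): x[0] = drone, x[1] = GCP.
DRONE, GCP = 0, 1
toy_factors = [
    ({DRONE: 1.0}, 10.0, 3.0),           # noisy drone GPS fix (hypothetical)
    ({GCP: 1.0}, 14.0, 3.0),             # noisy GCP GPS fix (hypothetical)
    ({GCP: 1.0, DRONE: -1.0}, 5.0, 0.1), # precise visual offset, GCP minus drone
]
drone_east, gcp_east = solve_weighted_least_squares(toy_factors, 2)
```

Because the visual measurement is given a much smaller sigma than the GPS fixes, the solved offset between the two positions ends up close to the visual value, while the GPS fixes anchor where the pair sits overall. The real problem is nonlinear, includes orientation, and spans many camera poses and GCPs over time.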

If you have questions about our approach or framework, please email me!